tidb-operator

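Overview

TiDB is a distributed database, and tidb-operator lets TiDB run on a Kubernetes (k8s) cluster.

This article verifies deploying TiDB on a k8s cluster using local PVs.

Installation

The k8s cluster in the test environment has three nodes:

```
[root@kube01 ~]# kubectl get nodes
```

Installing TiDB requires 6 PVs: PD needs three 1G PVs and TiKV needs three 10G PVs.

The environment has a spare 20G disk, vdc, which we split into two partitions, one of 2G and one of 18G:

```
[root@kube01 ~]# sgdisk -n 1:0:+2G /dev/vdc
```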
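As a quick check of the sizing above, the sketch below assumes each of the three nodes has the same vdc layout: a 2G partition and an 18G partition, each exposed whole as one local PV. It binds every claim to the smallest free PV that is large enough. The node names kube02/kube03 and the greedy binding loop are illustrative assumptions. In the real cluster, Kubernetes itself performs the binding.

```python
# Sketch: can 3 nodes x (2G + 18G) local PVs satisfy 3 PD claims of 1G
# and 3 TiKV claims of 10G? Node names and greedy binding are assumptions.

def build_pvs(nodes, partitions_gib):
    """One local PV per partition per node: (node, mount path, size in GiB)."""
    pvs = []
    for node in nodes:
        for i, size in enumerate(partitions_gib):
            pvs.append((node, f"/mnt/disks/vol{i}", size))
    return pvs


def bind(claims_gib, pvs):
    """Bind each claim (largest first) to the smallest free PV that fits.

    Returns (claim size, PV) pairs; raises ValueError if a claim cannot fit.
    """
    free = sorted(pvs, key=lambda pv: pv[2])
    bound = []
    for claim in sorted(claims_gib, reverse=True):
        for pv in free:
            if pv[2] >= claim:
                free.remove(pv)  # safe: we break right after removing
                bound.append((claim, pv))
                break
        else:
            raise ValueError(f"no free PV for a {claim}G claim")
    return bound


nodes = ["kube01", "kube02", "kube03"]   # kube02/kube03 are hypothetical
pvs = build_pvs(nodes, [2, 18])          # vdc1 -> vol0, vdc2 -> vol1
claims = [1] * 3 + [10] * 3              # 3 x PD, 3 x TiKV
for claim, (node, path, size) in bind(claims, pvs):
    print(f"{claim}G claim -> {node}:{path} ({size}G)")
```

Each 10G TiKV claim lands on an 18G vol1 PV and each 1G PD claim on a 2G vol0 PV, so six PVs are exactly enough.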

By default, tidb-operator treats each partition mounted at /mnt/disks/vol$i as a PV, so we mount the vdc1 and vdc2 partitions at vol0 and vol1.

TiDB recommends the ext4 file system:

```
mkfs.ext4 /dev/vdc1
```

The local-volume-provisioner shipped with tidb-operator turns these partitions into local PVs:

```
[root@kube01 local-dind]# kubectl apply -f local-volume-provisioner.yaml
```

Install tidb-operator with Helm

Edit charts/tidb-operator/values.yaml and set

```yaml
kubeSchedulerImage: gcr.io/google-containers/hyperkube:v1.13.4
```

so that the image version matches the kubelet version.
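Here is a small sketch of that version check. It assumes you compare the tag of kubeSchedulerImage with the output of `kubelet --version`, which prints a line such as `Kubernetes v1.13.4`. The helper names are hypothetical.

```python
# Sketch: check that the kubeSchedulerImage tag matches the kubelet version.
# Helper names are hypothetical.

def image_tag(image: str) -> str:
    """Return the tag of an image reference such as 'repo/name:v1.13.4'."""
    # Only the last path component can carry the tag, so a registry
    # host with a port (e.g. localhost:5000/name) is not misparsed.
    name = image.rsplit("/", 1)[-1]
    return name.split(":", 1)[1] if ":" in name else "latest"


def kubelet_version(version_output: str) -> str:
    """Extract 'v1.13.4' from 'Kubernetes v1.13.4'."""
    return version_output.strip().split()[-1]


def versions_match(image: str, version_output: str) -> bool:
    return image_tag(image) == kubelet_version(version_output)


image = "gcr.io/google-containers/hyperkube:v1.13.4"
print(versions_match(image, "Kubernetes v1.13.4"))
```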

Install tidb-cluster with Helm

Verify with the MySQL client:

```
[root@kube01 tidb-operator]# kubectl port-forward svc/demo-tidb 4000:4000 --namespace=tidb
```

References

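Using an erasure-coded pool for Ceph object storage

Overview

This article verifies using erasure coding for Ceph object storage.

The erasure-code profile in this article uses K+M = 4+2, which in theory tolerates the failure of M OSDs.

Configuration

Create the erasure-code profile and the CRUSH rule:

```
[root@ceph04 ~]# ceph osd erasure-code-profile set rgw_ec_profile k=4 m=2 crush-root=root_rgw plugin=isa crush-failure-domain=host
```

```
[root@ceph04 ~]# ceph osd crush rule create-erasure rgw_ec_rule rgw_ec_profile
```

The test environment has only 3 nodes, so the CRUSH rule has to be adjusted to first choose 3 hosts and then choose 2 OSDs on each host:

```
ceph osd getcrushmap -o crushmap
```

```
rule rgw_ec_rule {
```

```
crushtool -c crushmap.txt -o crushmap
```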
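The reasoning behind the adjusted rule can be checked with a toy model. With k=4, m=2 and a rule that picks 3 hosts and then 2 OSDs per host, every host holds exactly 2 of the 6 chunks. Losing any single host therefore loses m chunks, and the remaining k chunks are still enough to reconstruct the data. The host and OSD names are hypothetical. The model covers only the placement arithmetic, not CRUSH's pseudo-random selection.

```python
# Toy model of the adjusted rule: choose 3 hosts, then 2 OSDs on each,
# giving K + M = 6 chunk slots. Host/OSD names are hypothetical.
from itertools import combinations

K, M = 4, 2

hosts = {
    "host-a": ["osd.0", "osd.1"],
    "host-b": ["osd.2", "osd.3"],
    "host-c": ["osd.4", "osd.5"],
}

# One chunk per selected OSD: 3 hosts x 2 OSDs.
placement = [(host, osd) for host, osds in hosts.items() for osd in osds[:2]]
assert len(placement) == K + M


def survives(failed_osds):
    """Data can be reconstructed if at least K chunks remain."""
    remaining = [p for p in placement if p[1] not in failed_osds]
    return len(remaining) >= K


# Losing any one whole host removes exactly M chunks.
host_failures_ok = all(survives(set(osds)) for osds in hosts.values())

# Any two failed OSDs are tolerated; three are not.
all_osds = [osd for _, osd in placement]
two_osd_failures_ok = all(survives(set(c)) for c in combinations(all_osds, 2))
three_osd_failures_ok = all(survives(set(c)) for c in combinations(all_osds, 3))

print(host_failures_ok, two_osd_failures_ok, three_osd_failures_ok)
```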

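The cluster holds no data yet, so we stop rgw, delete the default default.rgw.buckets.data pool, and recreate default.rgw.buckets.data as an erasure-coded pool:

```
[root@ceph04 ~]# ceph osd pool create default.rgw.buckets.data 64 64 erasure rgw_ec_profile rgw_ec_rule
```

The new pool has size = k+m = 6 and, by default, min_size = k+1 = 5. When fewer than min_size shards of a PG are available, the PG goes incomplete. We therefore also lower the pool's min_size to 4 (= k), and the pool can then tolerate 2 failed OSDs.

```
[root@ceph04 ~]# ceph osd pool ls detail
```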
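The size/min_size arithmetic above can be written as a small sketch. It assumes, as observed in the text, that a PG stays active only while at least min_size of its k+m shards are available. The function names are illustrative.

```python
# Sketch of size / min_size arithmetic for an erasure-coded pool.
# Assumption: a PG stays active while available shards >= min_size.

def ec_pool_sizes(k: int, m: int):
    """Return (size, default min_size) for a k+m erasure-coded pool."""
    return k + m, k + 1


def tolerated_failures(k: int, m: int, min_size: int) -> int:
    """How many shard (OSD) losses a PG can absorb and stay active."""
    if min_size < k:
        raise ValueError("min_size below k: data could not be reconstructed")
    return (k + m) - min_size


size, min_size = ec_pool_sizes(4, 2)
print(size, min_size)                        # 6 5
print(tolerated_failures(4, 2, min_size))    # 1 with the default min_size
print(tolerated_failures(4, 2, 4))           # 2 after lowering min_size to k
```

Lowering min_size to k trades safety for availability. Writes accepted while only k shards are up have no redundancy left until the failed OSDs recover.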

Verification

Create an object storage user and verify with s3cmd:

```
[root@ceph04 ~]# radosgw-admin user create --uid=test --display-name=test
```

```
[root@ceph04 ~]# s3cmd ls s3://
```

After 2 OSDs were stopped, no PG went incomplete, and s3cmd could still upload and download normally:

```
[root@ceph04 ~]# systemctl stop ceph-osd@0
```

References

Rook

Overview

This article describes how to deploy a Ceph cluster on k8s with Rook.

The test k8s cluster has three nodes:

```
[root@kube01 ~]# kubectl get nodes
```

Rook deployment

Clone the Rook code:

```
git clone https://github.com/rook/rook.git
```

Deploy the rook-ceph operator with kubectl:

```
[root@kube01 rook]# kubectl apply -f cluster/examples/kubernetes/ceph/operator.yaml
```

Wait until all pods are Running:

```
[root@kube01 rook]# kubectl get pods -n rook-ceph-system -owide
```

Ceph uses vdb on each node as an OSD, so edit cluster/examples/kubernetes/ceph/cluster.yaml accordingly:

```yaml
storage: # cluster level storage configuration and selection
```

Deploy the Ceph cluster with kubectl:

```
[root@kube01 rook]# kubectl apply -f cluster/examples/kubernetes/ceph/cluster.yaml
```

Once the deployment finishes, there are three mons, one mgr, and three OSDs:

```
[root@kube01 rook]# kubectl get pods -n rook-ceph
```

Install the Ceph toolbox. From the rook-ceph-tools pod you can run ceph commands and check the cluster status:

```
[root@kube01 rook]# kubectl apply -f cluster/examples/kubernetes/ceph/toolbox.yaml
```

Log in to the rook-ceph-tools pod:

```
[root@kube01 rook]# kubectl exec -it rook-ceph-tools-544fb656d-tddrx -n rook-ceph -- bash
```

The deployed Ceph cluster has no rgw service. Add one as follows:

```
[root@kube01 rook]# kubectl apply -f cluster/examples/kubernetes/ceph/object.yaml
```

Expose the rgw service externally as a NodePort:

```
[root@kube01 rook]# kubectl apply -f cluster/examples/kubernetes/ceph/rgw-external.yaml
```

Deploy Ceph MDS as follows:

```
[root@kube01 rook]# kubectl apply -f cluster/examples/kubernetes/ceph/filesystem.yaml
```

The cluster/examples/kubernetes/ceph/ directory contains other YAML files for further operations on the Ceph cluster, such as enabling the mgr dashboard or installing Prometheus monitoring:

```
-rwxr-xr-x 1 root root 8139 Mar 15 15:20 cluster.yaml
```

References

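Ceph RGW multi-site configuration

Overview

This article describes how to configure asynchronous replication for Ceph RGW, which enables disaster recovery across data centers.

RGW active-active replication works between the zones of a single zonegroup. All zones in the zonegroup hold the same data, and users can read and write that data through any zone. Metadata operations, such as creating buckets or users, can still only be performed in the master zone. Data operations, such as creating or accessing objects in a bucket, can be handled by any zone.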
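A toy model of this rule helps explain the verification results further down, where bucket creation on the secondary fails once the master's rgw is stopped. The model assumes the receiving zone forwards bucket creation to the master zone and serves object operations itself. It is a hypothetical illustration, not RGW code, and the operation names are made up.

```python
# Toy model (not RGW code): which zone ends up serving a request.
# Assumption: bucket creation is forwarded to the master zone; object
# operations are served by the zone that received them. Op names are
# hypothetical.

METADATA_OPS = {"create_bucket"}


class ZoneDown(Exception):
    pass


def handle(op, receiving_zone, master_zone, up_zones):
    """Return the zone that serves the request, or raise ZoneDown."""
    if receiving_zone not in up_zones:
        raise ZoneDown(receiving_zone)
    target = master_zone if op in METADATA_OPS else receiving_zone
    if target not in up_zones:
        raise ZoneDown(target)
    return target


both = {"shanghai", "beijing"}
print(handle("create_bucket", "beijing", "shanghai", both))  # shanghai
print(handle("put_object", "beijing", "shanghai", both))     # beijing
```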

Environment

The test environment consists of two Ceph clusters:

Cluster ceph101

```
[root@ceph101 ~]# ceph -s
```

Cluster ceph102

```
[root@ceph102 ~]# ceph -s
```

Each cluster runs one rgw service. This test uses the two clusters to configure RGW multi-site and verify that it works.

The first cluster (ceph101) is the primary, and ceph102 is the secondary.

Multi-site configuration

On the primary cluster, create a realm named realm100:

```
[root@ceph101 ~]# radosgw-admin realm create --rgw-realm=realm100 --default
```

Create the master zonegroup:

```
[root@ceph101 ~]# radosgw-admin zonegroup create --rgw-zonegroup=cn --endpoints=http://172.16.143.201:8080 --rgw-realm=realm100 --master --default
```

Create the master zone:

```
[root@ceph101 ~]# radosgw-admin zone create --rgw-zonegroup=cn --rgw-zone=shanghai --master --default --endpoints=http://172.16.143.201:8080
```

Update the period:

```
[root@ceph101 ~]# radosgw-admin period update --commit
```

Create the synchronization user:

```
[root@ceph101 ~]# radosgw-admin user create --uid="syncuser" --display-name="Synchronization User" --system
```

Set the zone's keys to those of the synchronization user and update the period:

```
[root@ceph101 ~]# radosgw-admin zone modify --rgw-zone=shanghai --access-key=LPTHGKYO5ULI48Q88AWF --secret=jGFoORTVt72frRYsmcnPOpGXnz652Dl3C2IeBLN8
```

Delete the default zone and zonegroup:

```
[root@ceph101 ~]# radosgw-admin zonegroup remove --rgw-zonegroup=default --rgw-zone=default
```

Delete the default pools:

```
[root@ceph101 ~]# ceph osd pool delete default.rgw.control default.rgw.control --yes-i-really-really-mean-it
```

Edit the rgw configuration and add rgw_zone = shanghai:

```
[root@ceph101 ~]# vim /etc/ceph/ceph.conf
```

Restart rgw and check that the pools have been created:

```
[root@ceph101 ~]# systemctl restart ceph-radosgw@rgw.ceph101
```

On the secondary zone node, configure the following:

Pull the realm and set realm100 as the default realm:

```
[root@ceph102 ~]# radosgw-admin realm pull --url=http://172.16.143.201:8080 --access-key=LPTHGKYO5ULI48Q88AWF --secret=jGFoORTVt72frRYsmcnPOpGXnz652Dl3C2IeBLN8
```

Pull the period:

```
[root@ceph102 ~]# radosgw-admin period pull --url=http://172.16.143.201:8080 --access-key=LPTHGKYO5ULI48Q88AWF --secret=jGFoORTVt72frRYsmcnPOpGXnz652Dl3C2IeBLN8
```

Create the secondary zone:

```
[root@ceph102 ~]# radosgw-admin zone create --rgw-zonegroup=cn --rgw-zone=beijing --endpoints=http://172.16.143.202:8080 --access-key=LPTHGKYO5ULI48Q88AWF --secret=jGFoORTVt72frRYsmcnPOpGXnz652Dl3C2IeBLN8
```

Delete the default zone, the default zonegroup, and the default pools:

```
[root@ceph102 ~]# radosgw-admin zone delete --rgw-zone=default
```

Edit the rgw configuration and add rgw_zone = beijing:

```
[root@ceph102 ~]# vim /etc/ceph/ceph.conf
```

Restart rgw and check that the pools have been created:

```
[root@ceph102 ~]# systemctl restart ceph-radosgw@rgw.ceph102
```

Update the period:

```
[root@ceph102 ~]# radosgw-admin period update --commit
```

Check the sync status:

```
[root@ceph101 ~]# radosgw-admin sync status
```

```
[root@ceph102 ~]# radosgw-admin sync status
```

Verification

Create a test user in the master zone and check it from the secondary zone:

```
[root@ceph101 ~]# radosgw-admin user create --uid test --display-name="test user"
```

List the users in the secondary zone:

```
[root@ceph102 ~]# radosgw-admin user list
```

Create a test2 user in the secondary zone and check it from the master zone:

```
[root@ceph102 ~]# radosgw-admin user create --uid test2 --display-name="test2 user"
```

List the users in the master zone:

```
[root@ceph101 ~]# radosgw-admin user list
```

Users created in the master zone are visible in the secondary zone.

Users created in the secondary zone, however, are not visible in the master zone.

As the test user, create a bucket named bucket1 in the master zone:

```
[root@ceph101 ~]# s3cmd mb s3://bucket1
```

Check from the secondary zone:

```
[root@ceph102 ~]# radosgw-admin bucket list
```

As the test user, create a bucket named bucket2 in the secondary zone:

```
[root@ceph102 ~]# s3cmd mb s3://bucket2
```

Check from the master zone:

```
[root@ceph101 ~]# radosgw-admin bucket list
```

Upload a file in the master zone:

```
[root@ceph101 ~]# s3cmd put Python-3.4.9.tgz s3://bucket1/python3.4.9.tgz
```

Check from the secondary zone:

```
[root@ceph102 ~]# s3cmd ls s3://bucket1
```

Upload a file in the secondary zone:

```
[root@ceph102 ~]# s3cmd put anaconda-ks.cfg s3://bucket2/anaconda-ks.cfg
```

Check from the master zone:

```
[root@ceph101 tmp]# s3cmd ls s3://bucket2
```

Stop the master zone's rgw, then try to create a bucket in the secondary zone:

```
[root@ceph101 tmp]# systemctl stop ceph-radosgw@rgw.ceph101
```

```
[root@ceph102 ~]# s3cmd mb s3://bucket4
```

The bucket cannot be created in the secondary zone. The earlier bucket creation in the secondary zone was also forwarded to the master zone.

Conversely, with the secondary zone's rgw stopped, buckets can still be created in the master zone:

```
[root@ceph102 ~]# systemctl stop ceph-radosgw@rgw.ceph102
```

```
[root@ceph101 tmp]# s3cmd mb s3://bucket5
```

References

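Expanding a CentOS disk

Overview

This article describes how to expand the disk of a CentOS 7 virtual machine in VMware.

By default, CentOS 7 manages disks with LVM, and the root file system is XFS on a logical volume (LV).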

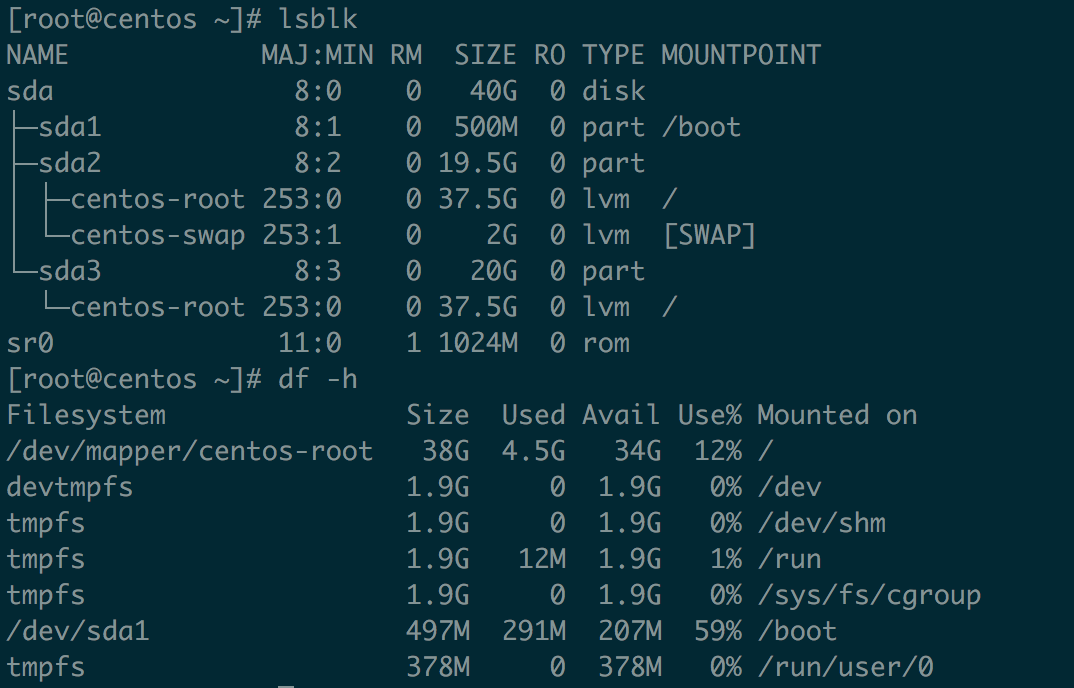

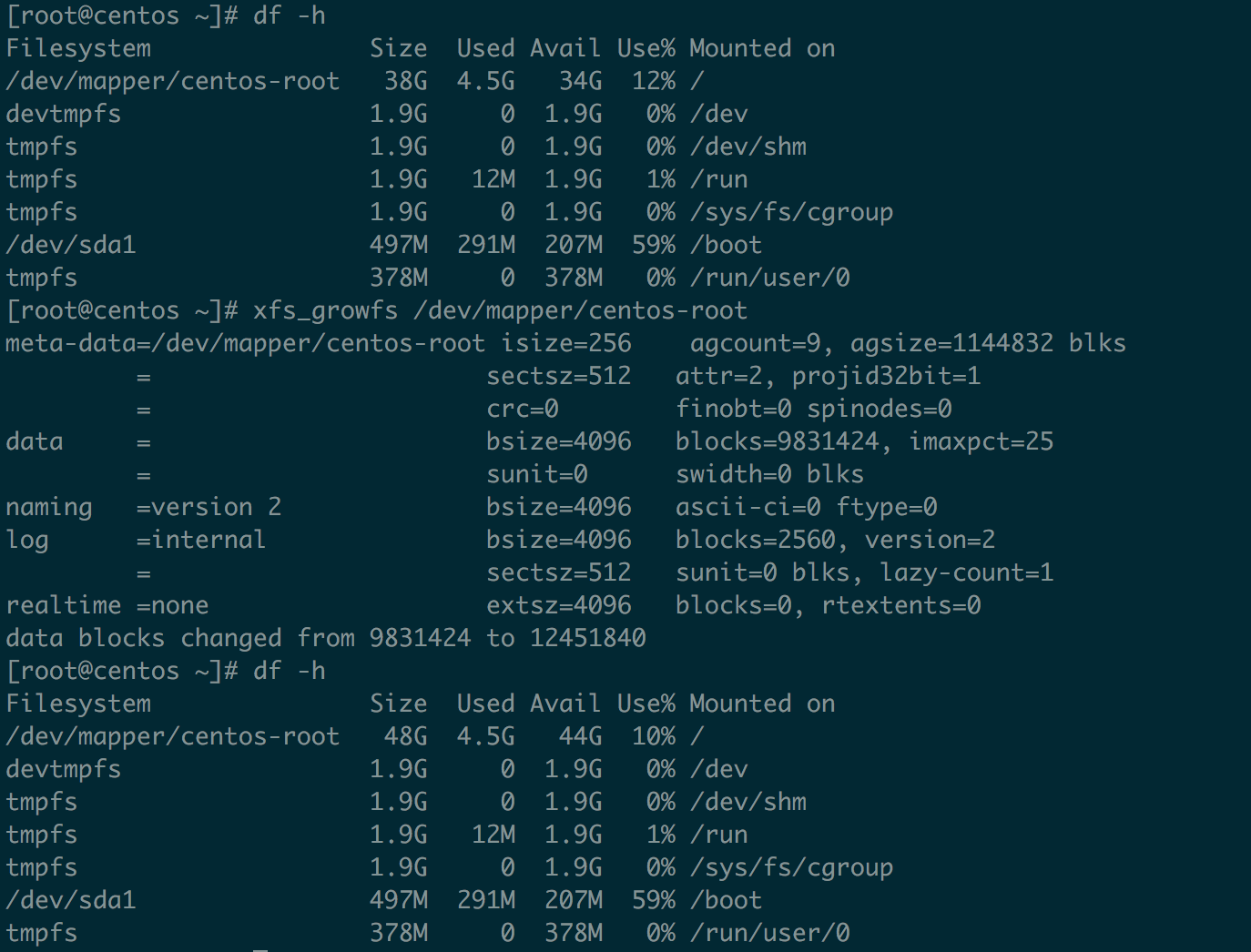

Before expansion

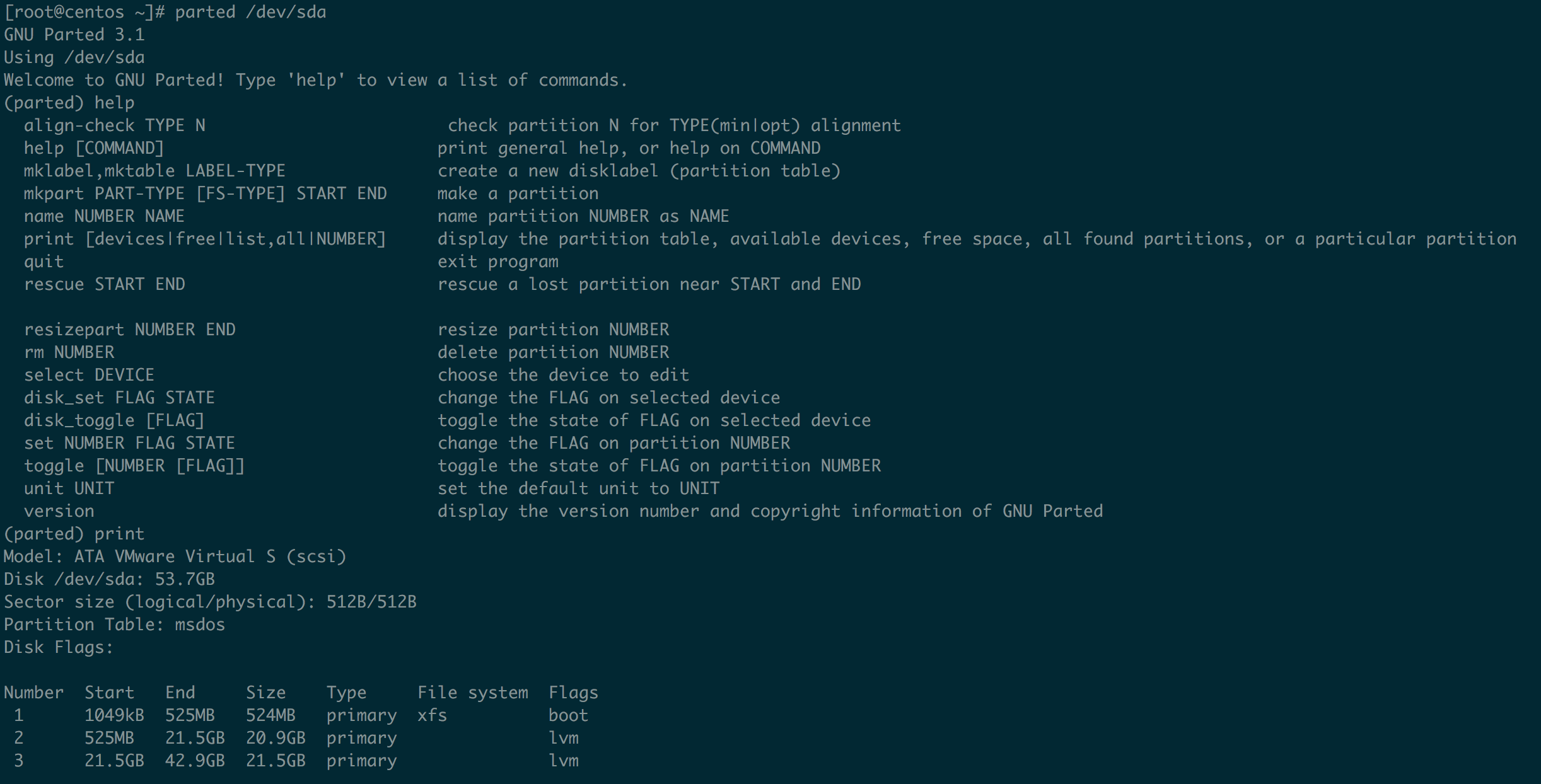

First, look at the layout before expansion.

The VM has a 40G disk, and the root partition is mounted on the centos-root LV, which is 37.5G.

Expansion

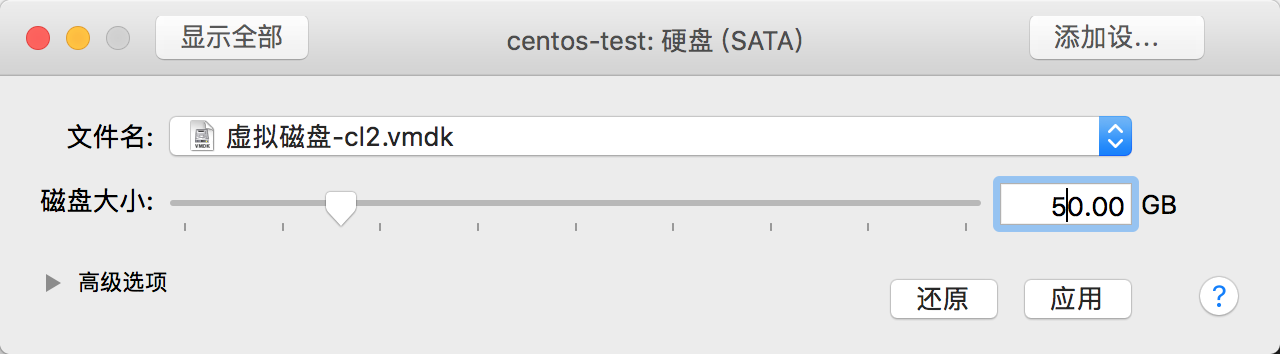

Shut down the VM and enlarge its disk in VMware.

After these steps, the disk has grown to 50G.

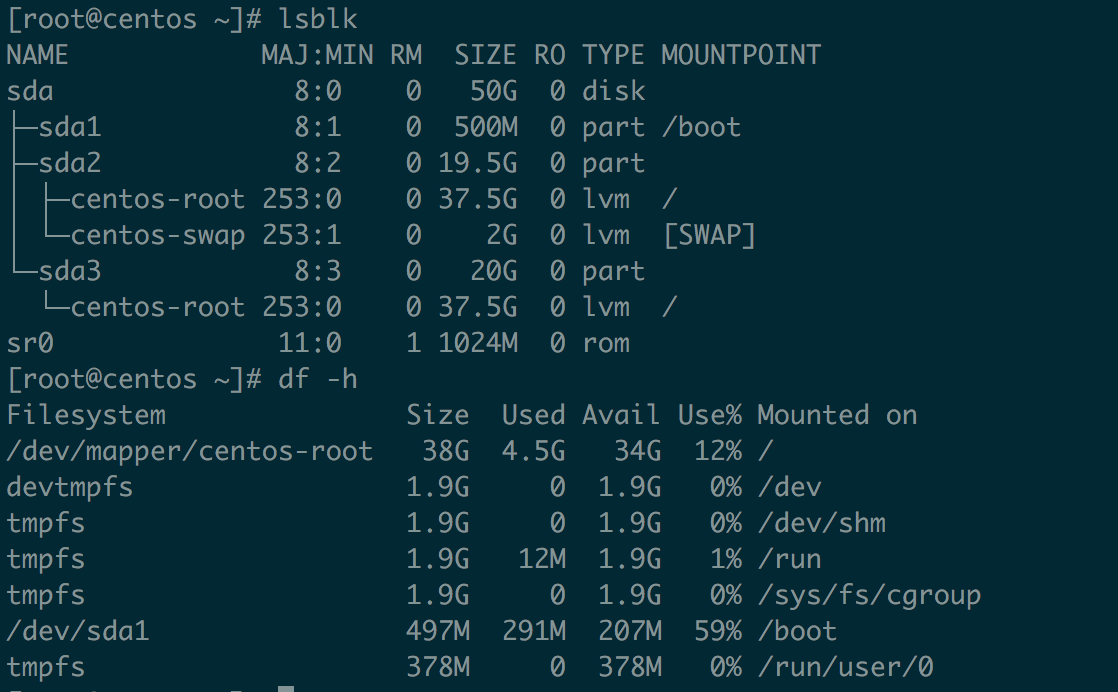

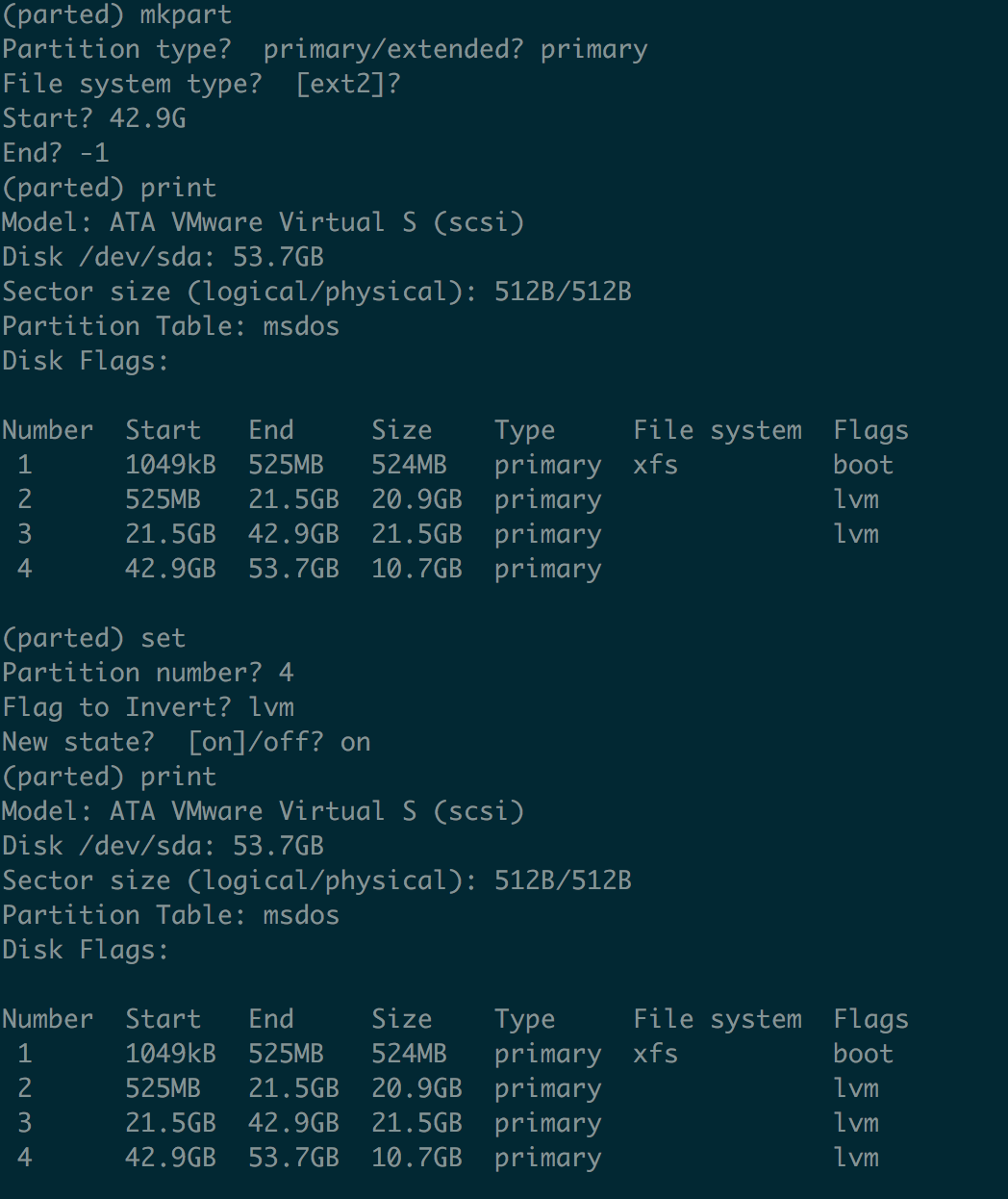

The disk is now 50G. Next, use parted to create an LVM partition from the remaining 10G.

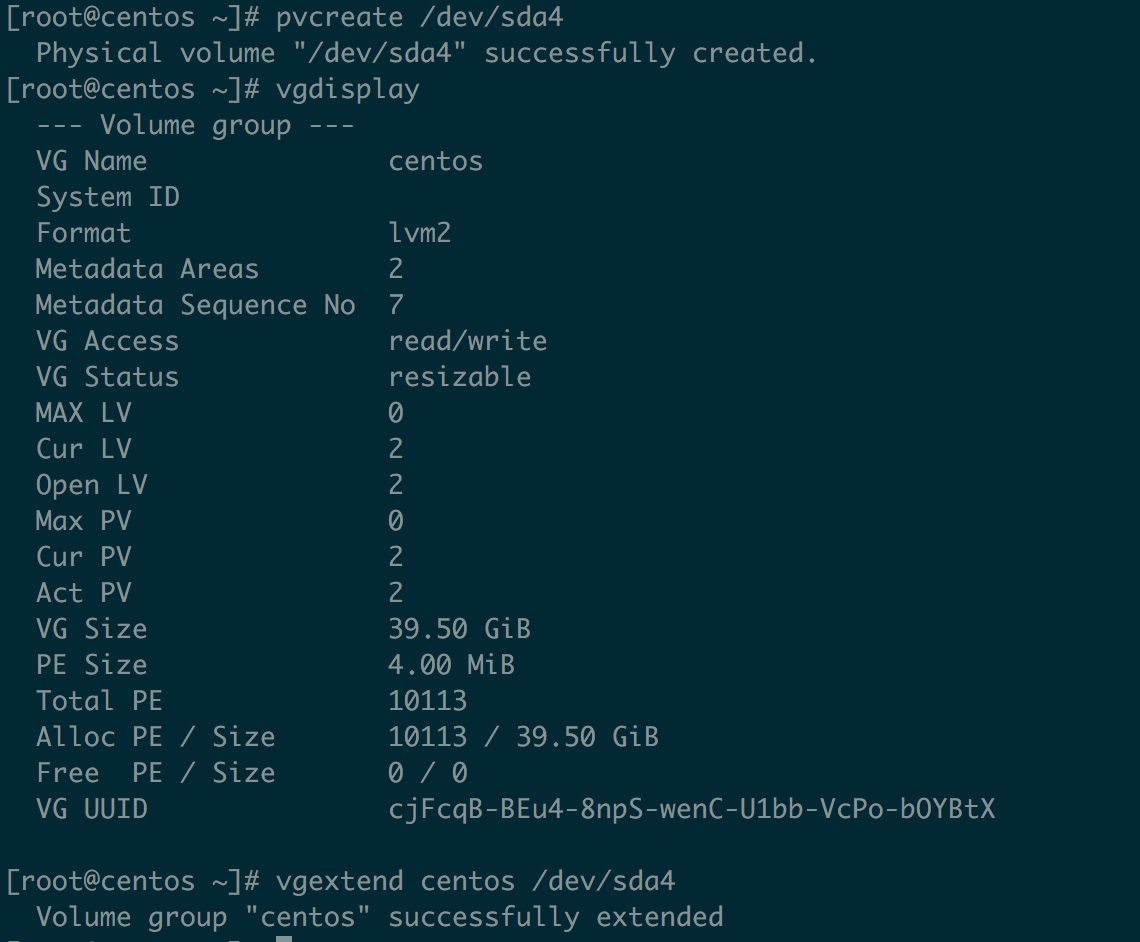

Make the new partition a PV and add it to the VG.

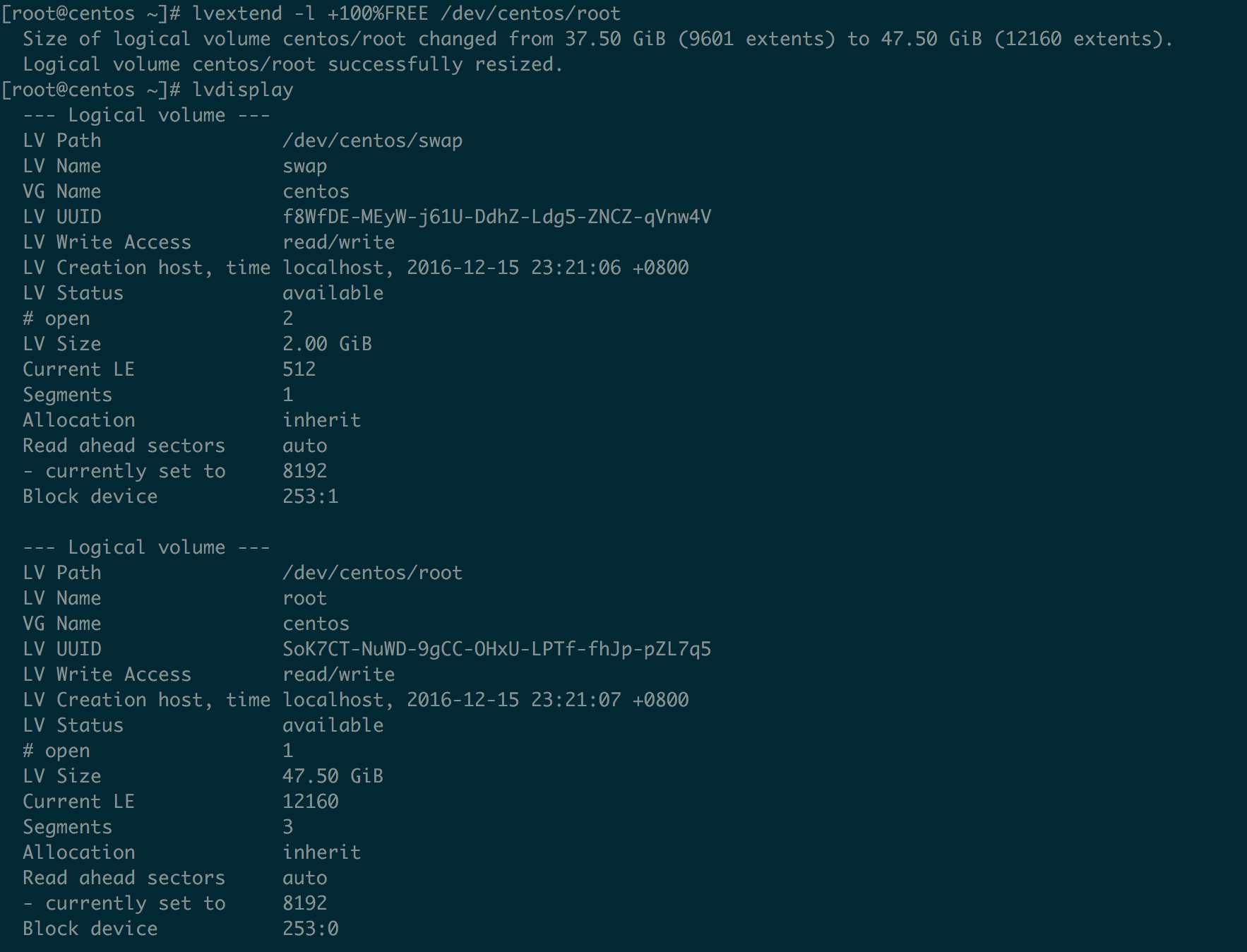

Extend the LV.

Use xfs_growfs to grow the XFS file system online.

In the end, the root file system has grown by 10G.
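Because the screenshots are not available, the LVM steps above are summarized below as a command list. The sketch only builds the commands as strings and does not run them. Several values are assumptions: the device `/dev/sda`, partition number 3, the start offset, and the VG/LV names `centos`/`root`, which are inferred from the `centos-root` device-mapper name. Check `lsblk` and `vgs` output before running anything.

```python
# Sketch: build (not run) the commands that grow the root LV after the
# virtual disk was enlarged. Device, partition number, start offset and
# VG/LV names are assumptions; verify them with lsblk / vgs first.
import shlex


def grow_root_commands(disk="/dev/sda", part_num=3, start="40GiB",
                       vg="centos", lv="root", mountpoint="/"):
    part = f"{disk}{part_num}"
    return [
        # Create a partition over the new free space and flag it for LVM.
        ["parted", "-s", disk, "mkpart", "primary", start, "100%"],
        ["parted", "-s", disk, "set", str(part_num), "lvm", "on"],
        # Ask the kernel to re-read the partition table.
        ["partprobe", disk],
        # Turn the partition into a PV and add it to the VG.
        ["pvcreate", part],
        ["vgextend", vg, part],
        # Give all free extents in the VG to the root LV.
        ["lvextend", "-l", "+100%FREE", f"/dev/{vg}/{lv}"],
        # Grow the mounted XFS file system online.
        ["xfs_growfs", mountpoint],
    ]


for cmd in grow_root_commands():
    print(shlex.join(cmd))
```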

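Enabling the restful plugin in Ceph mgr

Overview

This article describes how to enable the Ceph mgr restful plugin and retrieve Ceph data through its REST interface.

The environment is as follows:

```
[root@ceph11 ~]# ceph -s
```

Enabling the plugin

```
ceph mgr module enable restful
```

At this point the restful service has not started, and nothing listens on port 8003. To start the service, you also need to configure an SSL certificate.

Generate a self-signed certificate with:

```
ceph restful create-self-signed-cert
```

The restful service is now running on the active mgr node (ceph14):

```
[root@ceph14 ~]# netstat -nltp | grep 8003
```

By default, the active ceph-mgr daemon binds to port 8003 on any available IPv4 or IPv6 address of the host.

Setting the IP and port

```
ceph config-key set mgr/restful/server_addr $IP
```

If no IP is configured, restful listens on all IP addresses.

If no port is configured, restful listens on port 8003.

These settings apply to all mgrs. To configure a specific mgr, include that mgr's hostname in the key:

```
ceph config-key set mgr/restful/$name/server_addr $IP
```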
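The lookup order just described can be sketched as follows: a per-mgr key overrides the cluster-wide key, which in turn falls back to all addresses and port 8003. A dictionary stands in for `ceph config-key` storage. Two details are assumptions: that the `server_port` keys follow the same naming pattern as `server_addr`, and that the default wildcard address is `::`.

```python
# Sketch of the restful listen address/port lookup:
# per-mgr key -> cluster-wide key -> built-in default.
# The dict stands in for `ceph config-key`; defaults are assumptions.

DEFAULT_ADDR = "::"      # assumed wildcard: all IPv4/IPv6 addresses
DEFAULT_PORT = 8003


def lookup(config_keys, mgr_name, option, default):
    for key in (f"mgr/restful/{mgr_name}/{option}", f"mgr/restful/{option}"):
        if key in config_keys:
            return config_keys[key]
    return default


def listen_endpoint(config_keys, mgr_name):
    addr = lookup(config_keys, mgr_name, "server_addr", DEFAULT_ADDR)
    port = int(lookup(config_keys, mgr_name, "server_port", DEFAULT_PORT))
    return addr, port


keys = {
    "mgr/restful/server_addr": "192.168.180.138",
    "mgr/restful/ceph14/server_port": "9003",   # hypothetical override
}
print(listen_endpoint(keys, "ceph14"))   # ('192.168.180.138', 9003)
print(listen_endpoint({}, "ceph11"))     # ('::', 8003)
```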

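Creating a user

```
[root@ceph14 ~]# ceph restful create-key admin01
```

This user name and the key printed by the command (used as the password) are needed to access the REST interface later.

Verification

With the restful plugin running, you can verify it from a browser:

```
https://192.168.180.138:8003/
```

Get information about all pools:

```
https://192.168.180.138:8003/pool
```

Calling it from Python

You can call the ceph mgr restful interface with the requests library. The following Python script gets information about all pools.

```python
#! /usr/bin/env python3
```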
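Only the first line of that script survives. Below is a minimal sketch of the same call that swaps in the standard library (urllib) for requests. It assumes HTTP basic authentication, with the user created by `ceph restful create-key` and its key as the password. It also disables certificate verification because the certificate is self-signed. `API_KEY` is a placeholder, and `build_request`/`get_pools` are hypothetical helper names.

```python
#! /usr/bin/env python3
# Sketch: GET /pool from the ceph mgr restful module with basic auth.
# Assumptions: user/key from `ceph restful create-key admin01`,
# self-signed certificate (verification disabled), placeholder key.
import base64
import json
import ssl
import urllib.request

BASE_URL = "https://192.168.180.138:8003"
USER = "admin01"
API_KEY = "REPLACE-WITH-KEY"   # placeholder, not a real key


def build_request(path, user=USER, key=API_KEY, base_url=BASE_URL):
    """Build a GET request carrying an HTTP basic Authorization header."""
    token = base64.b64encode(f"{user}:{key}".encode()).decode()
    req = urllib.request.Request(base_url + path)
    req.add_header("Authorization", f"Basic {token}")
    return req


def get_pools():
    """Fetch /pool and return the decoded JSON."""
    # The certificate is self-signed, so skip verification (test use only).
    ctx = ssl.create_default_context()
    ctx.check_hostname = False
    ctx.verify_mode = ssl.CERT_NONE
    with urllib.request.urlopen(build_request("/pool"), context=ctx) as resp:
        return json.load(resp)
```

Call `get_pools()` from a host that can reach the active mgr. Disabling certificate verification is only reasonable for a test setup like this one.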

References

SQL Join

There are two tables, user and class, with the following contents:

```
MariaDB [jointest]> select * from user;
```

inner join

```
MariaDB [jointest]> select * from user inner join class on user.class_id=class.id;
```

left join

```
MariaDB [jointest]> select * from user left join class on user.class_id=class.id;
```

right join

```
MariaDB [jointest]> select * from user right join class on user.class_id=class.id;
```

full join

MySQL does not support FULL JOIN, but you can emulate it by combining the results of a LEFT JOIN and a RIGHT JOIN with UNION.

```
MariaDB [jointest]> select * from user left join class on user.class_id=class.id
```
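The same UNION technique can be tried without a MySQL server. The sketch below uses an in-memory SQLite database with hypothetical sample rows, since the original table contents are not shown. Older SQLite versions have no RIGHT JOIN (it arrived in 3.39), so the right half is written as a LEFT JOIN with the tables swapped and an explicit column list.

```python
# Sketch: emulate FULL JOIN as LEFT JOIN UNION "RIGHT JOIN" in SQLite.
# Sample rows are hypothetical. The right half is a LEFT JOIN with the
# tables swapped, which is equivalent to user RIGHT JOIN class.
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE class (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE user  (id INTEGER PRIMARY KEY, name TEXT, class_id INTEGER);
    INSERT INTO class VALUES (1, 'math'), (2, 'art'), (3, 'music');
    INSERT INTO user  VALUES (1, 'tom', 1), (2, 'amy', 2), (3, 'bob', 9);
""")

COLS = "user.id, user.name, class.id, class.name"
left = f"SELECT {COLS} FROM user LEFT JOIN class ON user.class_id = class.id"
right = f"SELECT {COLS} FROM class LEFT JOIN user ON user.class_id = class.id"

# UNION (not UNION ALL) removes the matched rows that both halves return.
full = conn.execute(f"{left} UNION {right} ORDER BY 1, 3").fetchall()
for row in full:
    print(row)
```

The result has one row per matched pair, plus bob (no matching class) and music (no users).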

cross join

The user table has 8 rows and the class table has 5, so the cross join returns 8 × 5 = 40 rows.

```
MariaDB [jointest]> select * from user cross join class;
```
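The row-count arithmetic can be confirmed with a quick SQLite sketch. The rows are hypothetical placeholders; only the counts (8 users, 5 classes) come from the text.

```python
# Sketch: a CROSS JOIN pairs every row with every row, so its size is
# the product of the table sizes. Row contents are hypothetical.
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE user (id INTEGER PRIMARY KEY, name TEXT)")
conn.execute("CREATE TABLE class (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO user (name) VALUES (?)",
                 [(f"user{i}",) for i in range(8)])
conn.executemany("INSERT INTO class (name) VALUES (?)",
                 [(f"class{i}",) for i in range(5)])

(count,) = conn.execute(
    "SELECT COUNT(*) FROM user CROSS JOIN class").fetchone()
print(count)   # 40
```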

mysqldump

```
-- MySQL dump 10.14 Distrib 5.5.60-MariaDB, for Linux (x86_64)
```

References

Setting up a single-node k8s environment with kubeadm

In this test, we install a single-node k8s environment with kubeadm.

The test runs on a virtual machine with the following configuration:

| OS | CPU | Memory | IP |
|---|---|---|---|
| CentOS Linux release 7.6.1810 (Core) | 2 vCPU | 2G | 172.16.143.171 |

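Environment preparation

Install Docker

```
[kube@kube ~]$ yum update
```

Disable swap

```
swapoff -a
```

Install k8s

Configure the Aliyun Kubernetes repository

```
cat <<EOF > /etc/yum.repos.d/kubernetes.repo
```

Disable SELinux

```
setenforce 0
```

Install kubeadm, kubelet, and kubectl

```
yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes
```

Configure the iptables-related kernel settings on CentOS

```
cat <<EOF > /etc/sysctl.d/k8s.conf
```

By default, kubeadm pulls the kube images from k8s.gcr.io, which is not reachable from mainland China. Instead, pull the corresponding images from Aliyun's registry and retag them so that kubeadm uses the local copies.

First, list the images that are needed:

```
[root@kube ~]# kubeadm config images list
```

Pull the images from the Aliyun registry and retag them as follows:

```
images=(
```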
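Since the original script is truncated, here is a sketch that derives the pull/tag/rmi commands from the output of `kubeadm config images list`. The mirror path is an assumption: `registry.cn-hangzhou.aliyuncs.com/google_containers` is a commonly used Aliyun mirror, but the original script's registry is not shown. The image versions in the example input are illustrative only.

```python
# Sketch: turn `kubeadm config images list` output into docker commands
# that pull from a mirror and retag as k8s.gcr.io/... for kubeadm.
# The mirror path is an assumption; replace the sample list with real output.
MIRROR = "registry.cn-hangzhou.aliyuncs.com/google_containers"
UPSTREAM = "k8s.gcr.io/"


def retag_commands(images, mirror=MIRROR):
    cmds = []
    for image in images:
        if not image.startswith(UPSTREAM):
            raise ValueError(f"unexpected image: {image}")
        name = image[len(UPSTREAM):]          # e.g. pause:3.1
        mirrored = f"{mirror}/{name}"
        cmds.append(f"docker pull {mirrored}")
        cmds.append(f"docker tag {mirrored} {image}")
        cmds.append(f"docker rmi {mirrored}")  # drop the mirror tag only
    return cmds


listed = """
k8s.gcr.io/kube-apiserver:v1.13.4
k8s.gcr.io/pause:3.1
"""
for cmd in retag_commands(listed.split()):
    print(cmd)
```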

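Initialize the cluster

```
[root@kube ~]# kubeadm init
```

The output shows that the k8s master initialized successfully. k8s recommends using the cluster as a non-root user, so next we create a user named kube and give it sudo privileges.

```
useradd kube
```

Grant the kube user sudo privileges with visudo:

```
kube ALL=(ALL) ALL
```

The following steps copy the k8s configuration into the user's .kube directory:

```
mkdir -p $HOME/.kube
```

To use the cluster, you also need a network plugin. k8s supports many, such as Calico, Flannel, and Weave; here we install Weave.

Configure Weave Net:

```
kubectl apply -f "https://cloud.weave.works/k8s/net?k8s-version=$(kubectl version | base64 | tr -d '\n')"
```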
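The shell substitution in that command may be easier to follow in Python. The full output of `kubectl version` is base64-encoded, and `tr -d '\n'` strips the line breaks that `base64` inserts. The result is then passed as the `k8s-version` query parameter. The URL is taken verbatim from the command, and the function name is hypothetical.

```python
# Sketch of what `$(kubectl version | base64 | tr -d '\n')` computes.
# Pass the raw `kubectl version` output, including its trailing newline.
import base64

WEAVE_URL = "https://cloud.weave.works/k8s/net"


def weave_manifest_url(kubectl_version_output: str) -> str:
    # b64encode never inserts line breaks, matching `base64 | tr -d '\n'`.
    encoded = base64.b64encode(kubectl_version_output.encode()).decode()
    return f"{WEAVE_URL}?k8s-version={encoded}"
```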

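By default, the master node does not run regular pods. This test has only one node, so the master node must be allowed to run them.

Allow the master node to run pods:

```
[kube@kube ~]$ kubectl taint nodes --all node-role.kubernetes.io/master-
node/kube untainted
```

A simple k8s cluster is now set up. The following commands show the nodes and the pods currently running in the cluster:

```
[kube@kube ~]$ kubectl get node
```

References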

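Deploying Ceph with ceph-deploy

I had always deployed Ceph with ceph-ansible, which suits large-scale deployments and supports many deployment modes.

The current scenario requires adjusting the cluster dynamically: it starts as a small cluster, and services are later added or removed as needed.

ceph-ansible has no dedicated playbook for adding or removing a single service, so it does not fit this case. ceph-deploy, by contrast, makes adding and removing services easy and meets this need.

The following test verifies deploying the various Ceph services with ceph-deploy.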

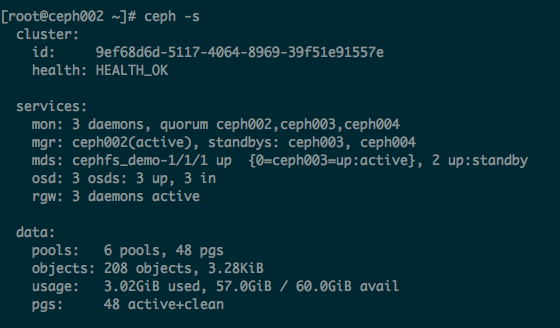

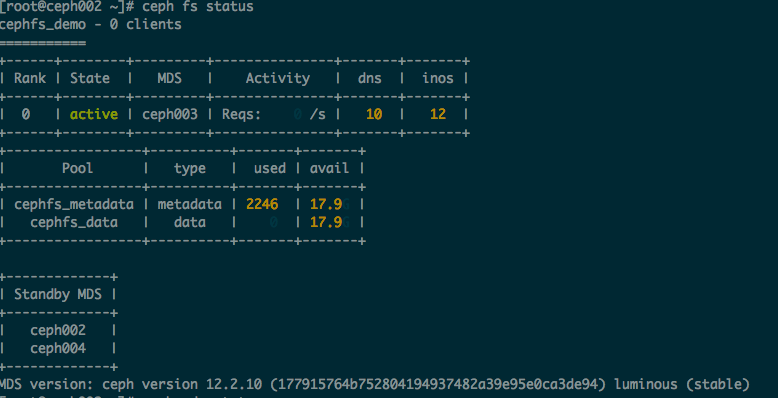

Environment

The test uses 4 virtual machines:

| Hostname | OS | Public network | Cluster network | Role |
|---|---|---|---|---|
| ceph001 | CentOS Linux release 7.5.1804 (Core) | 172.16.143.151 | 172.16.140.151 | ceph-deploy |
| ceph002 | CentOS Linux release 7.5.1804 (Core) | 172.16.143.152 | 172.16.140.152 | mon osd mgr rgw mds |
| ceph003 | CentOS Linux release 7.5.1804 (Core) | 172.16.143.153 | 172.16.140.153 | mon osd mgr rgw mds |
| ceph004 | CentOS Linux release 7.5.1804 (Core) | 172.16.143.154 | 172.16.140.154 | mon osd mgr rgw mds |

Configuration

- Disable the firewall and SELinux
- Configure passwordless SSH login from ceph001 to ceph002 through ceph004
- Configure a Ceph mirror hosted in China

Ceph repository configuration; this test installs the luminous release:

```
[ceph_stable]
```

Installation

Install the software

On ceph001, install ceph-deploy and create the Ceph cluster:

```
yum install ceph-deploy -y
```

ceph.conf and ceph.mon.keyring are generated in the current directory.

ceph.conf

```
[global]
```

Deploy MONs

```
ceph-deploy mon create ceph002 ceph003 ceph004
```

Gather the keys

```
ceph-deploy gatherkeys ceph002 ceph003 ceph004
```

Create OSDs

```
ceph-deploy osd create ceph002 --data /dev/sdb
```

Deploy MGRs

```
ceph-deploy mgr create ceph002 ceph003 ceph004
```

Push the admin keyring so that ceph commands can be run on the nodes

```
ceph-deploy admin ceph002 ceph003 ceph004
```

Deploy RGW

```
ceph-deploy rgw create ceph002 ceph003 ceph004
```

Deploy MDS

```
ceph-deploy mds create ceph002 ceph003 ceph004
```

```
ceph osd pool create cephfs_metadata 8 8
```

Screenshots

Summary

ceph-deploy suits small clusters and supports adjusting a cluster dynamically.

In addition, the current version of ceph-deploy uses ceph-volume instead of ceph-disk.